more about Pandas入门 DataFrame的常用函数

Prepping Data

Let’s download, import and clean our primary Canadian Immigration dataset using pandas read_excel() method for any visualization.

1 | df_can = pd.read_excel('https://cf-courses-data.s3.us.cloud-object-storage.appdomain.cloud/IBMDeveloperSkillsNetwork-DV0101EN-SkillsNetwork/Data%20Files/Canada.xlsx', |

Word Clouds

Word clouds (also known as text clouds or tag clouds) work in a simple way: the more a specific word appears in a source of textual data (such as a speech, blog post, or database), the bigger and bolder it appears in the word cloud.

Luckily, a Python package already exists in Python for generating word clouds. The package, called word_cloud was developed by Andreas Mueller. You can learn more about the package by following this link.

Let’s use this package to learn how to generate a word cloud for a given text document.

First, let’s install the package.

1 | # install wordcloud |

Word clouds are commonly used to perform high-level analysis and visualization of text data. Accordinly, let’s digress from the immigration dataset and work with an example that involves analyzing text data. Let’s try to analyze a short novel written by Lewis Carroll titled Alice’s Adventures in Wonderland. Let’s go ahead and download a .txt file of the novel.

1 | # download file and save as alice_novel.txt |

File downloaded and saved!Next, let’s use the stopwords that we imported from word_cloud. We use the function set to remove any redundant stopwords.

1 | stopwords = set(STOPWORDS) |

Create a word cloud object and generate a word cloud. For simplicity, let’s generate a word cloud using only the first 2000 words in the novel.

1 | # instantiate a word cloud object |

Awesome! Now that the word cloud is created, let’s visualize it.

1 | # display the word cloud |

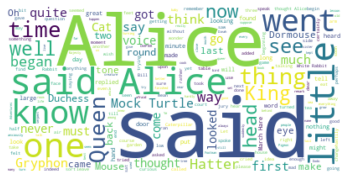

Interesting! So in the first 2000 words in the novel, the most common words are Alice, said, little, Queen, and so on. Let’s resize the cloud so that we can see the less frequent words a little better.

1 | fig = plt.figure() |

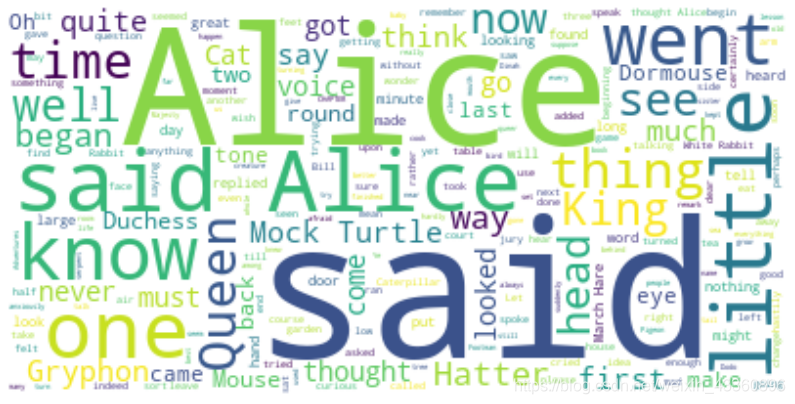

Much better! However, said isn’t really an informative word. So let’s add it to our stopwords and re-generate the cloud.

1 | stopwords.add('said') # add the words said to stopwords |

Excellent! This looks really interesting! Another cool thing you can implement with the word_cloud package is superimposing the words onto a mask of any shape. Let’s use a mask of Alice and her rabbit. We already created the mask for you, so let’s go ahead and download it and call it _alice_mask.png_.

1 | # download image |

Image downloaded and saved!Let’s take a look at how the mask looks like.

1 | fig = plt.figure() |

Shaping the word cloud according to the mask is straightforward using word_cloud package. For simplicity, we will continue using the first 2000 words in the novel.

1 | # instantiate a word cloud object |

Really impressive!

Unfortunately, our immmigration data does not have any text data, but where there is a will there is a way. Let’s generate sample text data from our immigration dataset, say text data of 90 words.

what was the total immigration from 1980 to 2013?

1 | total_immigration = df_can['Total'].sum() |

6409153Using countries with single-word names, let’s duplicate each country’s name based on how much they contribute to the total immigration.

1 | max_words = 90 |

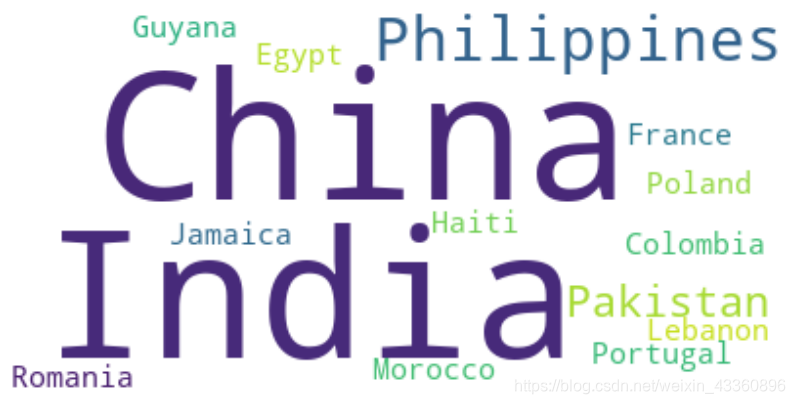

'China China China China China China China China China Colombia Egypt France Guyana Haiti India India India India India India India India India Jamaica Lebanon Morocco Pakistan Pakistan Pakistan Philippines Philippines Philippines Philippines Philippines Philippines Philippines Poland Portugal Romania 'We are not dealing with any stopwords here, so there is no need to pass them when creating the word cloud.

1 | # create the word cloud |

Word cloud created!1 | # display the cloud |

According to the above word cloud, it looks like the majority of the people who immigrated came from one of 15 countries that are displayed by the word cloud. One cool visual that you could build, is perhaps using the map of Canada and a mask and superimposing the word cloud on top of the map of Canada. That would be an interesting visual to build!